Geekflare Newsletter

AI Is Getting Smaller, Smarter, and More Serious

Inside: smaller models beat giants, AI enters national labs, and emotional AI gets regulated

Hey folks,

It’s Monday, so let’s quickly catch up on some of the biggest and most interesting AI updates from the past few days.

GPT-5.2 Takes the Lead in Scientific Reasoning

OpenAI’s GPT-5.2 has topped the new FrontierScience benchmarks, outperforming models like Claude Opus 4.5 and Gemini 3 Pro in expert-level scientific reasoning. These benchmarks focus on olympiad-style problems and advanced research scenarios rather than general conversation.

According to reporting by Axios, GPT-5 has also demonstrated the ability to assist with novel laboratory experiments under controlled conditions and within established safety protocols. It’s still early, but this points toward AI playing a more active role in scientific research rather than remaining just an analytical assistant.

Nvidia’s NitroGen Rethinks Gaming AI

Nvidia has introduced NitroGen, an open vision-action model trained on 40,000 hours of gameplay across more than 1,000 commercial games. Unlike traditional game-playing AI, NitroGen learns directly from pixels and actions, without reinforcement learning.

Because it can generalise across games, NitroGen isn’t just about entertainment. The same approach could be applied to simulation training, robotics, and virtual environments, where learning from visual input is critical.

Molmo 2 Shows Efficiency Can Beat Scale

Ai2 has released Molmo 2, an 8-billion-parameter multimodal model that outperforms its own 72B predecessor on several vision tasks. In areas like video tracking, temporal understanding, and pixel-level grounding, Molmo 2 also rivals much larger competitors such as Gemini 3.

What stands out here is efficiency. These gains come without massive hardware requirements, meaning advanced vision tasks can now run on modest setups. It’s another data point suggesting that smarter training and architecture are starting to matter more than sheer model size.

China Moves to Regulate “Emotional” AI

China’s cyber regulator has drafted new rules targeting AI systems that simulate human emotions. The proposal requires companies to implement addiction-prevention measures, add security safeguards, and intervene when users display extreme emotional distress. Harmful or manipulative content must also be blocked.

The move highlights growing global concern around AI’s psychological and emotional influence, and shows how regulation is starting to focus not just on data and security, but on how AI affects human behaviour.

Google DeepMind Goes National

Google DeepMind has announced a partnership with all 17 US national laboratories, with deployments planned from 2026. The collaboration will bring frontier AI tools such as AlphaEvolve for drug discovery and AlphaGenome for genetics into national research workflows.

The scope goes beyond biology, extending into materials science, energy research, and weather forecasting. This marks a shift from isolated AI breakthroughs toward national-scale scientific collaboration, where advanced models become part of public research infrastructure.

That’s it for today’s Monday AI news roundup.

See you tomorrow with a new AI tool and tutorial.

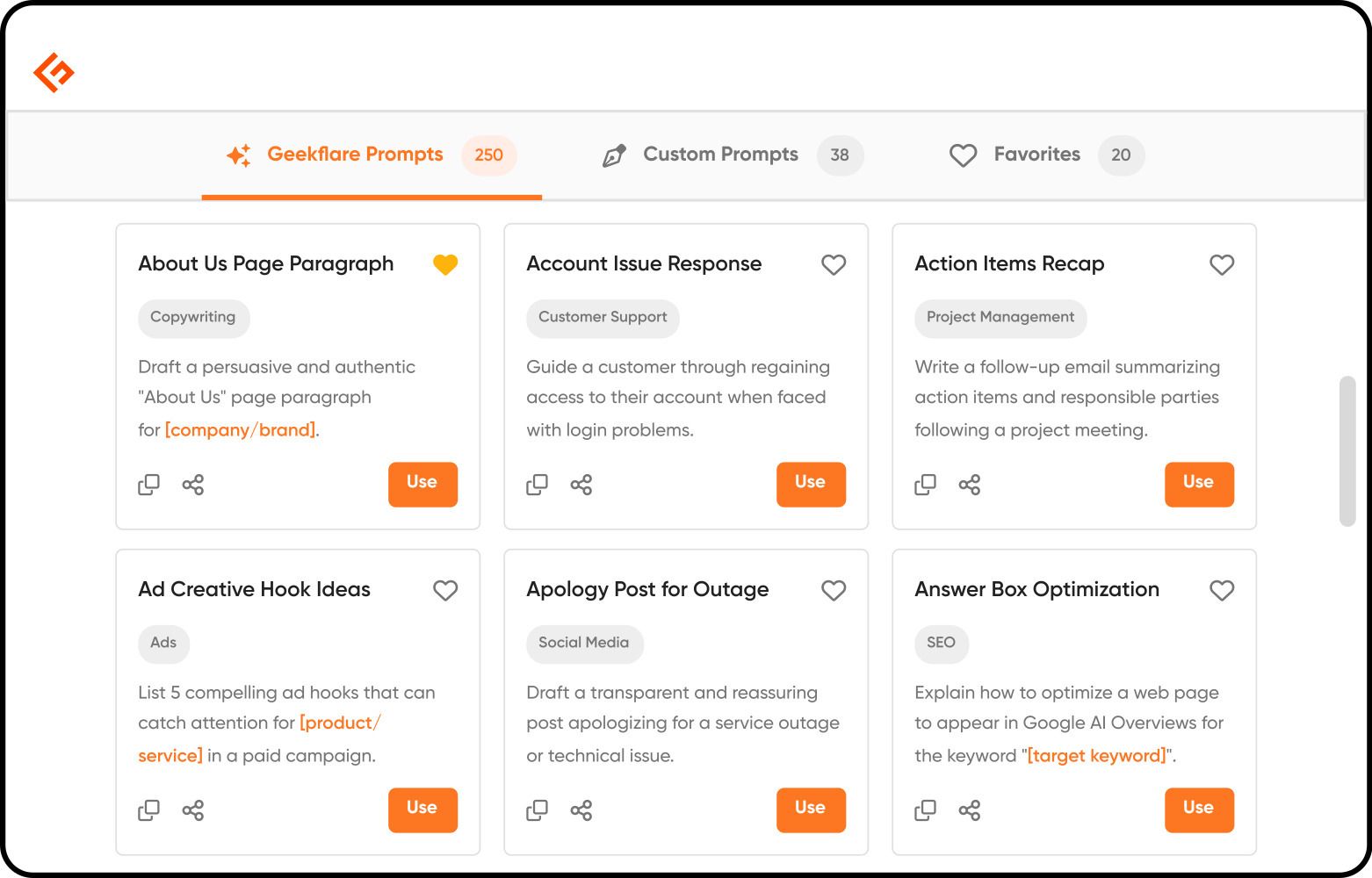

Curated Prompt Library

Kickstart any task with our Curated Prompt Library, packed with ready-to-use prompts for everyday work. You can also create your own custom prompts, save them privately, and share them with your team, so everyone works faster, stays consistent, and stays on the same page.

Cheers,

Keval, Editor